At the TC Camp unconference earlier this year, I participated in the discussion on review processes and tools. I’ve been interested in this topic for a while (here’s an article from 6 years ago). The session was pretty sobering. There have been a lot of great ideas, and even some good tools, for conducting reviews over the past 10 or 12 years. But in the final analysis, it seems that no one has been successful in implementing and sustaining any of these approaches. SMEs, in general, seem unwilling to incur any overhead whatsoever in the quest for good reviews. Overhead means adopting any new tools, certainly, especially those that can’t be used offline, but also even having to give a moment’s thought to the process.

So how can information developers move past the ad hoc approach to reviewing? Clearly, we need to provide a familiar experience so SMEs don’t have to think about it. The unpleasant conclusion is that it should be based on a common authoring and review tool such as Google Docs or (gasp!) Word+SharePoint. Despite the contempt we feel for Word, my team is asked constantly to provide content in these forms for review. So, if you can’t beat ’em, join ’em.

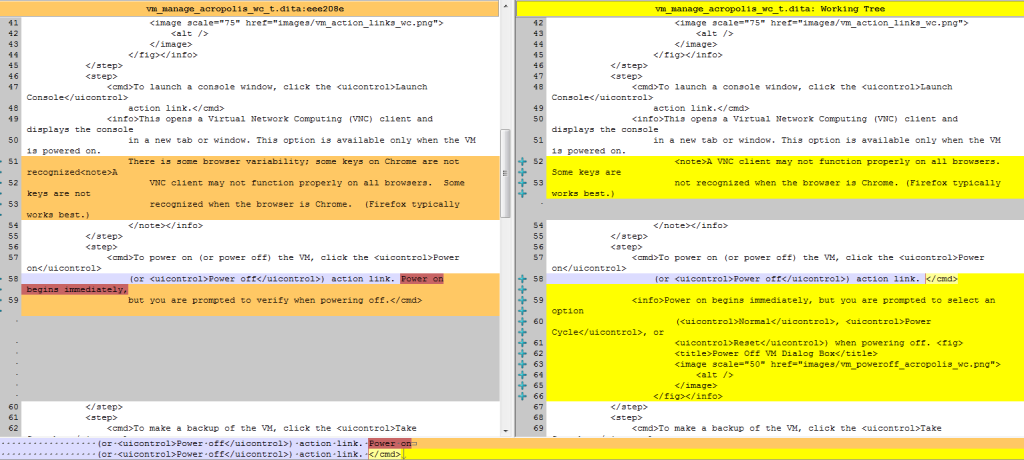

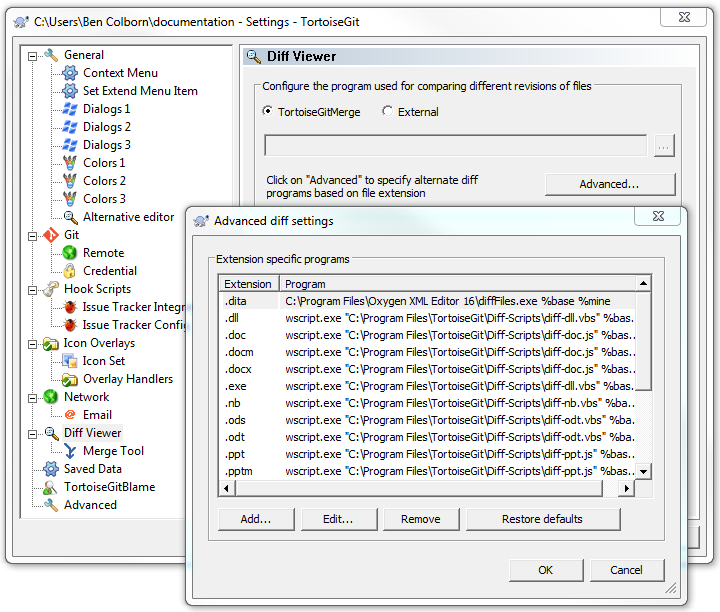

Since my company is in the final stages of moving from Google to Office 365, we decided to look at Word as an option, even though in my opinion the Google Docs review experience is superior. The RTF transformation in the DITA Open Toolkit has been broken for as long as I can remember. In the past I’ve used HTML as an intermediate step for going from DITA to Word, but it has a lot of styling issues.

Then I found that Word does a pretty handy conversion from PDF. Just open the PDF from Word, and there it is. Although the formatting may not be good enough for production use, it’s plenty good enough for review. One gap is that cross-references aren’t preserved, but that’s not a show-stopper for review.

It’s hard to overstate the revulsion I feel in using PDF as an interchange format. How ridiculous is it to strip out all the hard-won semantics from the source content, then heuristically bring back a poor imitation of that information? But I’m no Randian hero, just a guy trying to get stuff done.

Automation

Ok, so it’s sort of interesting that you can convert PDF to Word just by opening it up and saving it, but that’s a manual process. What about automating the conversion?

Naturally PowerShell is the answer. The full script is a bit longer, but here are the important parts.

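$wordApp = New-Object -ComObject Word.Application

$wordApp.Visible = $false

$pdfFile = (Resolve-Path $pdfFile).Path

# Second argument is ConfirmConversions: $false suppresses the conversion prompt
$wordDoc = $wordApp.Documents.Open($pdfFile, $false)

Write-Host "Opened PDF file $pdfFile"

# Change only the file extension; Replace("pdf", "docx") would also alter "pdf" elsewhere in the path
$wordFile = [System.IO.Path]::ChangeExtension($pdfFile, "docx")

# $SaveFormat is assumed to hold the WdSaveFormat enum type, set up elsewhere in the full script.
# wdFormatDocumentDefault is the .docx format; wdFormatDocument is the older binary .doc format.
$wordDoc.SaveAs([ref]$wordFile,[ref]$SaveFormat::wdFormatDocumentDefault)

Write-Host "Saved Word file as $wordFile"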

You’ll want to wrap opening the PDF file and saving the DOCX file in a try/catch block, but that’s about it. My version also adds a “Draft” watermark to the Word file.
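As a rough sketch of that wrapping (not the full script, and untested against every Word version), the open and save calls can sit inside try/catch/finally so that the document is closed and Word quits even when the conversion fails. The function name `Convert-PdfToDocx` is made up for illustration, and the literal 16 is the value of `wdFormatDocumentDefault` in Word’s `WdSaveFormat` enumeration.

```powershell
# Hypothetical helper; assumes a Word version that can open PDFs (2013 or later).
function Convert-PdfToDocx {
    param([Parameter(Mandatory = $true)][string]$PdfFile)

    $pdfPath  = (Resolve-Path $PdfFile).Path
    $wordFile = [System.IO.Path]::ChangeExtension($pdfPath, 'docx')

    $wordApp = New-Object -ComObject Word.Application
    $wordApp.Visible = $false
    $wordDoc = $null

    try {
        # $false: do not ask the user to confirm the conversion
        $wordDoc = $wordApp.Documents.Open($pdfPath, $false)
        # 16 = wdFormatDocumentDefault (.docx)
        $wordDoc.SaveAs([ref]$wordFile, [ref]16)
        Write-Host "Saved Word file as $wordFile"
    }
    catch {
        Write-Error "Conversion of $pdfPath failed: $_"
    }
    finally {
        # Runs on success and on failure, so Word is not left holding the file open.
        if ($wordDoc) { $wordDoc.Close($false) }   # $false: discard unsaved changes
        $wordApp.Quit()
        [void][System.Runtime.InteropServices.Marshal]::ReleaseComObject($wordApp)
    }
}
```

Calling it would look like `Convert-PdfToDocx .\guide.pdf`, where `guide.pdf` is a placeholder file name.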

Troubleshooting

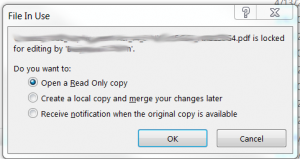

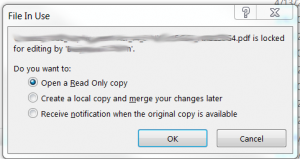

One issue I found was that sometimes the file wouldn’t close properly. The next time I tried to convert the same file, a warning like this would pop up. The solution was to go to the Task Manager and kill WINWORD.EXE.
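The same cleanup can be scripted instead of going through Task Manager. This is a minimal sketch, and it assumes you have no other Word documents open, because it stops every running Word process, not just the hidden one left behind by the conversion.

```powershell
# Stop all running Word processes; unsaved work in any open Word window is lost.
# -ErrorAction SilentlyContinue avoids an error when Word is not running.
Get-Process -Name WINWORD -ErrorAction SilentlyContinue | Stop-Process -Force
```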


Next steps

Using this utility is a two-step process. The first thing I’d like to do is create an Ant target that would generate a PDF from DITA source, then immediately convert it to Word.

Once that’s done, the desired end state would be to do regular automated builds and upload to SharePoint. The trick will be to merge comments, but that seems to be possible with PowerShell. Although I haven’t fully investigated the approach described here, it looks promising.